Linux remote monitoring tools are essential for ensuring the smooth operation and security of your Linux systems. These tools provide real-time insights into system performance, resource utilization, and potential issues, allowing you to proactively address problems before they impact your operations.


From monitoring CPU usage and memory consumption to tracking network traffic and disk space, these tools empower administrators to gain a comprehensive understanding of their systems’ health. By leveraging advanced features like alerts, reporting, and integration with other management tools, Linux remote monitoring solutions enable efficient system management and proactive problem resolution.

Introduction to Linux Remote Monitoring

Remote monitoring of Linux systems is crucial for ensuring their smooth operation, identifying potential issues, and preventing downtime. It allows administrators to proactively manage their systems from anywhere, regardless of physical location.

Benefits of Remote Monitoring

Remote monitoring provides numerous benefits, including:

- Early Issue Detection: Remote monitoring tools can detect issues like high CPU utilization, disk space depletion, or service failures before they impact users or applications.

- Proactive Maintenance: By identifying potential problems early, administrators can schedule maintenance or apply updates before issues escalate, reducing downtime and improving system stability.

- Improved Security: Remote monitoring tools can track suspicious activity, identify security breaches, and provide alerts to prevent unauthorized access or data theft.

- Cost Savings: By reducing downtime and preventing issues from escalating, remote monitoring can save organizations money by minimizing lost productivity and potential data loss.

- Enhanced System Performance: Remote monitoring tools can provide valuable insights into system performance, helping administrators identify bottlenecks and optimize resource allocation.

Types of Linux Systems That Can Be Monitored Remotely

Remote monitoring is applicable to a wide range of Linux systems, including:

- Servers: Web servers, database servers, application servers, and other critical systems can be monitored remotely to ensure their availability and performance.

- Workstations: Remote monitoring can be used to track the health of individual workstations, identify resource constraints, and ensure user productivity.

- Embedded Systems: Linux-based embedded systems, such as routers, firewalls, and network appliances, can be monitored remotely for performance, security, and fault detection.

- Cloud Environments: Remote monitoring is essential for managing cloud-based Linux infrastructure, ensuring the performance and availability of virtual machines and containers.

Key Features of Linux Remote Monitoring Tools

A comprehensive Linux remote monitoring tool provides a range of essential features to ensure optimal system performance, security, and stability. These features work in unison to offer a holistic view of your Linux infrastructure, enabling proactive problem resolution and efficient resource management.

Real-time Data Collection and Analysis

Real-time data collection is crucial for effective monitoring, as it provides a continuous stream of information about your Linux systems. This data includes metrics such as CPU utilization, memory usage, disk space, network traffic, and process activity. Real-time analysis allows you to identify trends, anomalies, and potential issues as they occur, enabling timely intervention and preventing performance degradation or security breaches.
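The following minimal sketch shows where real-time CPU figures come from on Linux. It samples the aggregate `cpu` line of `/proc/stat` twice and computes the share of non-idle time between the two samples. It assumes a Linux host and counts `idle + iowait` as idle time. The function names are illustrative, and real agents collect many more metrics than this.

```python
import time


def read_cpu_times(stat_text: str) -> tuple[int, int]:
    """Return (idle, total) jiffies from the aggregate "cpu" line of /proc/stat."""
    for line in stat_text.splitlines():
        if line.startswith("cpu "):
            fields = [int(v) for v in line.split()[1:]]
            # Order: user nice system idle iowait irq softirq steal [guest guest_nice].
            # Guest time is already included in user/nice, so only the first 8 are summed.
            idle = fields[3] + (fields[4] if len(fields) > 4 else 0)  # idle + iowait
            return idle, sum(fields[:8])
    raise ValueError("no aggregate 'cpu' line found")


def cpu_percent(before: tuple[int, int], after: tuple[int, int]) -> float:
    """Share of non-idle time, in percent, between two (idle, total) samples."""
    idle_delta = after[0] - before[0]
    total_delta = after[1] - before[1]
    if total_delta <= 0:
        return 0.0
    return 100.0 * (total_delta - idle_delta) / total_delta


def sample_cpu(interval: float = 1.0) -> float:
    """Read /proc/stat twice, `interval` seconds apart (Linux only)."""
    with open("/proc/stat") as f:
        first = read_cpu_times(f.read())
    time.sleep(interval)
    with open("/proc/stat") as f:
        second = read_cpu_times(f.read())
    return cpu_percent(first, second)
```

On a Linux host, `sample_cpu(1.0)` returns the utilization over a one-second window. Monitoring agents repeat this kind of sampling continuously and ship the results to the monitoring server.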

Alert Notifications and Reporting

Alert notifications are essential for proactive problem resolution. When predefined thresholds are breached or specific events occur, the monitoring tool triggers alerts, notifying administrators via email, SMS, or other communication channels. This ensures that issues are detected and addressed promptly, minimizing downtime and potential damage. Comprehensive reporting provides detailed insights into system performance and historical data, facilitating capacity planning, trend analysis, and root cause investigation.
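A common refinement of threshold alerting is to notify only when a metric's state changes. That way, a server stuck above its threshold does not generate a message on every check. The sketch below assumes two illustrative thresholds (warning and critical) and keeps state in memory. The class name and message format are hypothetical, and delivery by email or SMS is left out.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ThresholdAlerter:
    """Classify values against thresholds and report only state changes."""
    warning: float
    critical: float
    # Last known state per (host, metric); kept in memory for this sketch.
    states: dict[tuple[str, str], str] = field(default_factory=dict)

    def classify(self, value: float) -> str:
        if value >= self.critical:
            return "CRITICAL"
        if value >= self.warning:
            return "WARNING"
        return "OK"

    def observe(self, host: str, metric: str, value: float) -> Optional[str]:
        """Record a sample; return an alert message only if the state changed."""
        state = self.classify(value)
        previous = self.states.get((host, metric), "OK")
        self.states[(host, metric)] = state
        if state == previous:
            return None  # unchanged: suppress a repeat notification
        return f"{host} {metric}: {previous} -> {state} (value={value})"
```

Recovery messages (for example, CRITICAL back to OK) fall out of the same logic, which is usually what on-call staff want to see.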

Popular Linux Remote Monitoring Tools

Linux remote monitoring tools are essential for system administrators to ensure the stability and performance of their systems. These tools provide real-time insights into system health, resource utilization, and potential issues. By leveraging these tools, administrators can proactively identify and address problems before they escalate, reducing downtime and improving overall system reliability.


Here’s a comparison of some of the top Linux remote monitoring tools:

| Tool | Pricing Model |
|---|---|
| Nagios | Open source (free) |
| Zabbix | Open source (free) |
| Prometheus | Open source (free) |
| Datadog | Subscription-based |
| New Relic | Subscription-based |

Setting Up and Configuring a Linux Remote Monitoring Tool

Setting up and configuring a Linux remote monitoring tool is a crucial step in ensuring the health and stability of your systems. This process involves selecting a suitable tool, installing it on your monitoring server, and configuring it to monitor your target systems. The specific steps may vary depending on the tool you choose, but the general principles remain the same.

Installing and Configuring a Remote Monitoring Tool

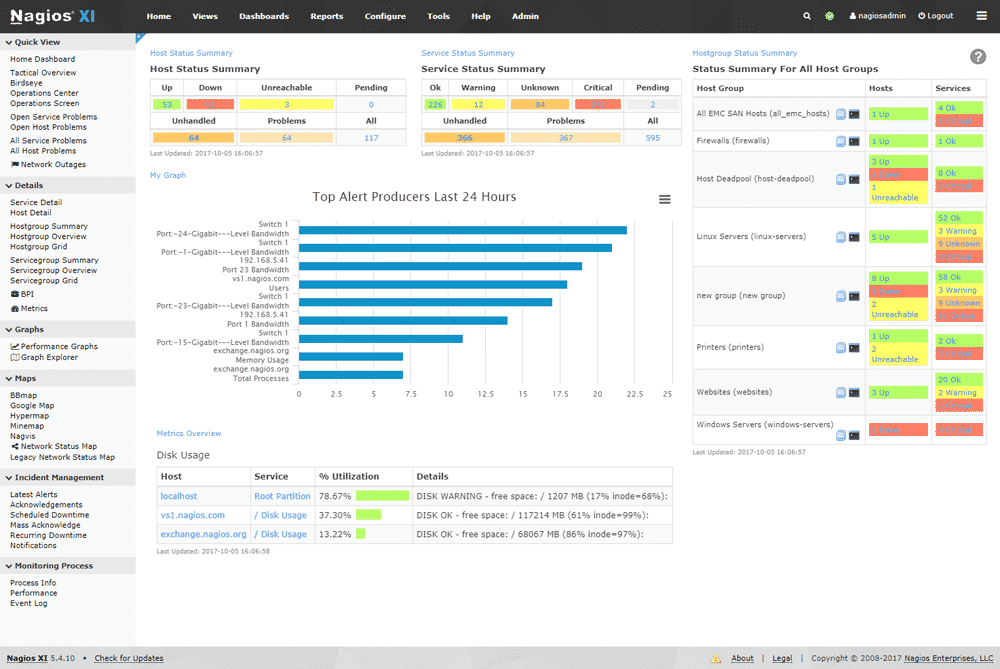

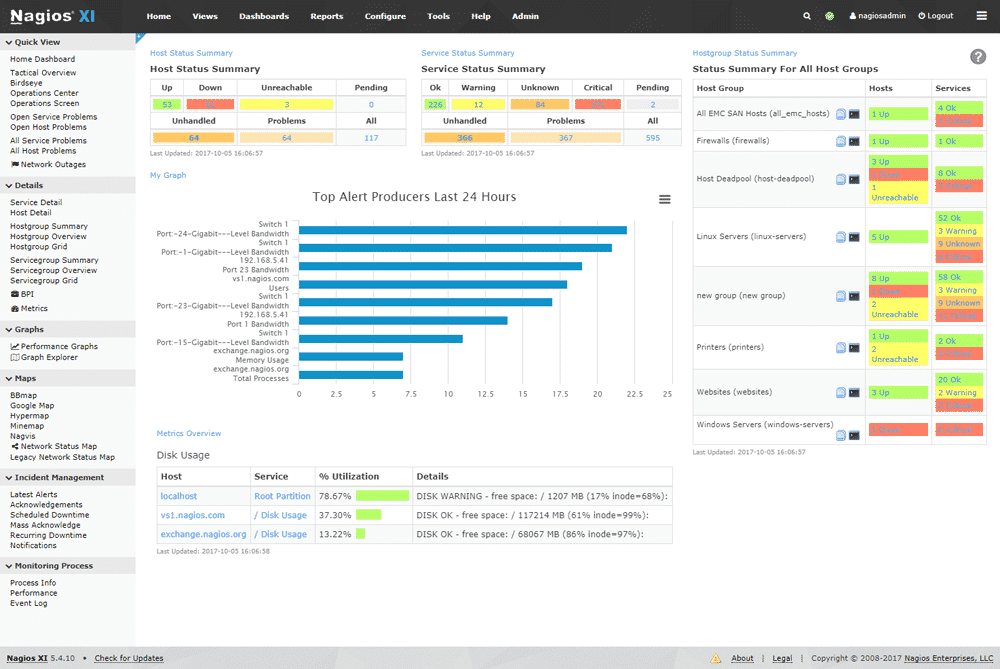

This section will guide you through the process of installing and configuring a chosen remote monitoring tool, using Nagios as an example. Nagios is a widely used open-source monitoring tool known for its robust features and flexibility.

- Download and Install Nagios: The first step is to download the Nagios software from the official website. You can choose the appropriate version based on your operating system and requirements. After downloading, follow the installation instructions provided in the documentation. These instructions usually involve unpacking the downloaded package, running a setup script, and configuring the necessary settings.

- Configure Nagios: Once installed, you need to configure Nagios to meet your specific monitoring needs. This involves defining the hosts and services you want to monitor, setting up notification methods, and customizing other settings. Nagios reads plain-text configuration files made up of object definitions such as `define host { ... }`, `define service { ... }`, and `define contact { ... }`. You can find detailed documentation and examples on the Nagios website to help you understand the configuration process.

- Install Plugins: Nagios uses plugins to check the status of various services and resources. These plugins are small programs that perform specific checks and report their findings to Nagios. You can find a wide range of plugins available for Nagios, covering various services and technologies. The installation process for plugins typically involves downloading the plugin from the Nagios website and installing it on the monitoring server. The specific installation instructions may vary depending on the plugin.

- Test and Verify: After installing and configuring Nagios, it’s crucial to test and verify that it’s working correctly. This involves running test checks against your target systems and ensuring that Nagios can successfully collect data and generate alerts. Before restarting Nagios, run `nagios -v` with the path to your main `nagios.cfg` to catch configuration errors. Then use the web interface to confirm that checks return the expected states.
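Nagios plugins follow a simple convention. The plugin prints a one-line status, optionally followed by `|` and performance data, and reports its result through its exit code: 0 means OK, 1 WARNING, 2 CRITICAL, and 3 UNKNOWN. The sketch below is a hypothetical load-average plugin written to that convention. The script name, argument order, and thresholds are assumptions. The standard plugins package already ships a `check_load` that you would normally use instead.

```python
import os

# Nagios plugin exit codes
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def evaluate_load(load1: float, warn: float, crit: float) -> tuple[int, str]:
    """Map the 1-minute load average to a status code and a status line."""
    if load1 >= crit:
        status = CRITICAL
    elif load1 >= warn:
        status = WARNING
    else:
        status = OK
    # Text before '|' is shown to operators; text after it is performance data
    # in the form label=value;warn;crit;min
    line = f"LOAD {LABELS[status]} - load1 is {load1:.2f} | load1={load1:.2f};{warn};{crit};0"
    return status, line


def main(argv: list[str]) -> int:
    """Hypothetical usage: check_load_sketch.py WARN CRIT"""
    try:
        warn, crit = float(argv[1]), float(argv[2])
        load1 = os.getloadavg()[0]  # Unix only
    except (IndexError, ValueError, OSError, AttributeError) as exc:
        print(f"LOAD UNKNOWN - {exc}")
        return UNKNOWN
    status, line = evaluate_load(load1, warn, crit)
    print(line)
    return status
```

To run this as a plugin, import `sys`, end the script with `sys.exit(main(sys.argv))` under an `if __name__ == "__main__":` guard, and reference the script from a Nagios command definition.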

Defining Monitoring Targets and Setting Up Alerts

Defining monitoring targets and setting up alerts are essential for effectively using a remote monitoring tool. This process involves identifying the systems and services you want to monitor, setting up thresholds for different metrics, and configuring how alerts are triggered and delivered.

- Identify Monitoring Targets: Begin by identifying the systems and services you want to monitor. This could include servers, network devices, databases, web applications, and other critical infrastructure components. For each target, determine the specific metrics you want to track. These metrics could include CPU usage, memory utilization, disk space, network traffic, and service availability.

- Set Up Thresholds: Once you’ve identified the metrics you want to track, you need to define thresholds for each metric. These thresholds represent the acceptable limits for each metric. When a metric exceeds its threshold, Nagios triggers an alert. For example, you might set a threshold for CPU usage at 80%. If the CPU usage exceeds 80%, Nagios will trigger an alert.

- Configure Alerts: You need to configure how alerts are triggered and delivered. This involves specifying the conditions that trigger an alert, the recipients of the alert, and the method of delivery. For example, you can configure Nagios to send an email notification to your team if a server’s CPU usage exceeds 80%.
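The 80% CPU example above can be expressed as a short sketch. It builds the alert email with Python's standard `email` package and sends it through an SMTP relay. The sender address, relay host, and recipient list are placeholders. In a real Nagios setup, a contact and a notification command would normally handle this rather than custom code.

```python
import smtplib
from email.message import EmailMessage
from typing import Optional

CPU_THRESHOLD = 80.0  # percent, matching the example threshold in the text


def build_cpu_alert(host: str, cpu_percent: float, recipients: list[str],
                    sender: str = "monitoring@example.com") -> Optional[EmailMessage]:
    """Return an alert email if cpu_percent exceeds the threshold, else None."""
    if cpu_percent <= CPU_THRESHOLD:
        return None
    msg = EmailMessage()
    msg["Subject"] = f"[ALERT] {host}: CPU at {cpu_percent:.0f}% (threshold {CPU_THRESHOLD:.0f}%)"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg.set_content(f"CPU utilization on {host} is {cpu_percent:.1f}%.")
    return msg


def send_alert(msg: EmailMessage, smtp_host: str = "localhost") -> None:
    """Deliver through an SMTP relay; the relay host is an assumption."""
    with smtplib.SMTP(smtp_host) as smtp:
        smtp.send_message(msg)
```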

Integrating the Tool with Existing Infrastructure

Integrating a remote monitoring tool with your existing infrastructure is a crucial step in ensuring seamless operation. This process involves configuring the tool to communicate with your target systems and ensuring that it can collect data and generate alerts effectively.

- Configure Network Connectivity: The monitoring tool needs to be able to communicate with the target systems over the network. This involves configuring network settings on both the monitoring server and the target systems. You might need to configure firewalls to allow communication between the monitoring server and the target systems.

- Configure Authentication: The monitoring tool might need to authenticate with the target systems to access data and perform checks. This involves configuring user accounts and permissions on the target systems. You might also need to configure authentication methods such as SSH or Kerberos.

- Integrate with Other Tools: You might want to integrate the remote monitoring tool with other tools in your infrastructure. This could include logging tools, ticketing systems, or other monitoring tools. Integration can help you streamline your monitoring workflow and improve your overall monitoring efficiency.
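Before debugging anything else, confirm that the monitoring server can actually reach the agent's port through any firewalls. The sketch below attempts a TCP handshake with a timeout. The ports to test depend on your tool: for example, NRPE listens on 5666 and the Zabbix agent on 10050 by default. A successful handshake does not prove that authentication or the agent itself is working.

```python
import socket


def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, DNS failure, ...
        return False
```

For a hypothetical host, `port_reachable("web01.example.com", 5666)` returns `False` if the connection is refused, filtered, or times out.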

Monitoring Key Performance Indicators (KPIs)

Effective monitoring of Linux systems involves keeping a close eye on key performance indicators (KPIs) that reveal the health and efficiency of your system. By tracking these KPIs, you can proactively identify potential issues, optimize performance, and ensure smooth system operation.

Key Performance Indicators

These KPIs provide insights into the resource usage and overall health of your Linux system. Regularly monitoring these metrics is essential for identifying potential bottlenecks, resource exhaustion, and performance degradation.

- CPU Utilization: Measures the percentage of time the CPU is actively processing tasks. High CPU utilization can indicate a workload that is exceeding the system’s processing capabilities, potentially leading to slowdowns and performance issues.

- Memory Usage: Reflects the amount of RAM being used by running processes. High memory usage can lead to excessive swapping, which significantly impacts system performance.

- Disk Space: Shows the amount of available storage space on your system’s hard drives. Insufficient disk space can hinder system operations and potentially lead to data loss.

- Network Traffic: Tracks the volume of data being transmitted and received over the network. High network traffic can indicate heavy network usage, potentially impacting system performance or indicating security breaches.
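On Linux, most of these KPIs can be read directly from the kernel's `/proc` interface. As an illustration for memory, the sketch below parses `/proc/meminfo` and computes RAM usage from `MemAvailable` (present on kernels 3.14 and later), which excludes reclaimable cache. It also computes swap usage. The function names and the choice of percentages as output are assumptions.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Map each /proc/meminfo field to its numeric value (mostly in kB)."""
    values = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            values[key.strip()] = int(parts[0])
    return values


def memory_kpis(info: dict[str, int]) -> dict[str, float]:
    """Percent of RAM in use (excluding reclaimable cache) and percent of swap in use."""
    mem_used = 100.0 * (info["MemTotal"] - info["MemAvailable"]) / info["MemTotal"]
    swap_total = info.get("SwapTotal", 0)
    swap_used = 0.0
    if swap_total:
        swap_used = 100.0 * (swap_total - info["SwapFree"]) / swap_total
    return {"mem_used_pct": mem_used, "swap_used_pct": swap_used}


def read_memory_kpis(path: str = "/proc/meminfo") -> dict[str, float]:
    """Read and evaluate the live file (Linux only)."""
    with open(path) as f:
        return memory_kpis(parse_meminfo(f.read()))
```

Rising swap usage alongside high RAM usage is the pattern that signals the excessive swapping described above.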

Interpreting and Analyzing KPIs

Understanding how to interpret and analyze these KPIs is crucial for identifying potential issues and taking corrective actions.

CPU Utilization

High CPU utilization, especially consistently near 100%, suggests that the system is under heavy load and may be struggling to keep up. This could be due to resource-intensive processes, system bottlenecks, or even malicious activities.

- Identifying the culprit: Use tools like `top` or `htop` to identify processes consuming the most CPU resources. This will help pinpoint the source of the high utilization.

- Optimizing performance: If the high utilization is caused by resource-intensive processes, consider optimizing these processes, upgrading hardware, or distributing the workload across multiple servers.

Memory Usage

High memory usage, especially when approaching the system’s total RAM capacity, can indicate a potential memory leak or a process consuming an excessive amount of memory.

- Identifying memory leaks: Tools like `free` or `vmstat` can help identify memory leaks. A steady increase in memory usage over time, even with no new processes being launched, can indicate a leak.

- Addressing memory issues: If a process is consuming excessive memory, consider restarting the process, upgrading hardware, or optimizing the process to reduce its memory footprint.
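A "steady increase over time" can be made concrete by fitting a straight line to periodic memory samples, such as a process's resident set size, and checking the slope. This is a minimal sketch. The 1 MB-per-sample threshold is an arbitrary assumption, and a positive trend is only a hint worth investigating, not proof of a leak.

```python
from statistics import mean


def slope_per_sample(values: list[float]) -> float:
    """Least-squares slope of the values against their sample index."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    xs = list(range(n))
    x_bar, y_bar = mean(xs), mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def looks_like_leak(rss_mb: list[float], min_growth_mb: float = 1.0) -> bool:
    """True if memory grows by at least min_growth_mb per sample on average."""
    return slope_per_sample(rss_mb) >= min_growth_mb
```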

Disk Space

Low disk space can lead to performance degradation, as the system may struggle to write new data or store temporary files.

- Monitoring disk space: Use tools like `df` to monitor disk space usage and identify partitions with limited space.

- Managing disk space: Free up disk space by deleting unnecessary files, archiving data, or moving files to external storage. Consider upgrading to a larger hard drive or partitioning your disk more efficiently.
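The `df` check can also be automated. The sketch below reads mount points from `/proc/mounts`, skips an assumed list of pseudo-filesystems, and uses `shutil.disk_usage` to report any mount with less than 10% free space. The threshold and the skipped filesystem types are assumptions. Mount points containing spaces (escaped as `\040` in `/proc/mounts`) are not decoded here.

```python
import shutil

# Pseudo or read-only filesystems that would only cause noise (an assumed list).
SKIP_FSTYPES = {"proc", "sysfs", "tmpfs", "devtmpfs", "devpts", "cgroup", "cgroup2", "squashfs"}


def mount_points(mounts_text: str) -> list[str]:
    """Mount points of real filesystems from /proc/mounts content."""
    points = []
    for line in mounts_text.splitlines():
        fields = line.split()  # device, mount point, fs type, options, ...
        if len(fields) >= 3 and fields[2] not in SKIP_FSTYPES:
            points.append(fields[1])
    return points


def low_space(paths: list[str], min_free_pct: float = 10.0) -> list[tuple[str, float]]:
    """(path, free %) for each path with less than min_free_pct free space."""
    result = []
    for path in paths:
        try:
            usage = shutil.disk_usage(path)
        except OSError:
            continue  # unreadable or vanished mount; skipped in this sketch
        free_pct = 100.0 * usage.free / usage.total if usage.total else 100.0
        if free_pct < min_free_pct:
            result.append((path, free_pct))
    return result


def check_all(mounts_file: str = "/proc/mounts") -> list[tuple[str, float]]:
    """Evaluate every real mount on a live Linux host."""
    with open(mounts_file) as f:
        return low_space(mount_points(f.read()))
```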

Network Traffic

High network traffic can impact system performance, especially if it’s sustained over long periods. It can also indicate potential security breaches or unauthorized access.

- Monitoring network traffic: Use tools like `iftop` or `tcpdump` to monitor network traffic patterns and identify unusual spikes or high-volume connections.

- Addressing network traffic issues: If the high traffic is due to legitimate activities, consider optimizing network configurations or upgrading network infrastructure. If suspicious traffic is detected, investigate potential security threats.
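For a lightweight view of throughput without `iftop`, you can sample the interface byte counters in `/proc/net/dev` twice and take the difference. This sketch assumes the standard Linux layout of that file: two header lines, then, after each interface name, received bytes as the first field and transmitted bytes as the ninth. It ignores counter wraparound.

```python
def parse_net_dev(text: str) -> dict[str, tuple[int, int]]:
    """Map interface name -> (rx_bytes, tx_bytes) from /proc/net/dev content."""
    counters = {}
    for line in text.splitlines()[2:]:  # the first two lines are headers
        name, sep, data = line.partition(":")
        if not sep:
            continue
        fields = data.split()
        counters[name.strip()] = (int(fields[0]), int(fields[8]))
    return counters


def rates(before: dict[str, tuple[int, int]], after: dict[str, tuple[int, int]],
          seconds: float) -> dict[str, tuple[float, float]]:
    """Bytes per second received and sent per interface between two samples."""
    out = {}
    for iface, (rx1, tx1) in after.items():
        if iface in before:
            rx0, tx0 = before[iface]
            out[iface] = ((rx1 - rx0) / seconds, (tx1 - tx0) / seconds)
    return out
```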

Troubleshooting and Problem Resolution

Remote monitoring tools are designed to provide insights into the health and performance of Linux systems, but occasional issues can arise, leading to disruptions in data collection or inaccurate reporting. Understanding common problems and effective troubleshooting techniques is crucial for ensuring reliable and consistent monitoring.

Common Issues Encountered During Remote Monitoring

Troubleshooting remote monitoring issues often involves identifying the root cause of the problem. Here are some common issues that can arise during remote monitoring:

- Network Connectivity Problems: Disruptions in network connectivity between the monitoring server and the target Linux system can lead to data loss or delayed updates. This could be due to network outages, firewall restrictions, or configuration errors on either end.

- Agent Configuration Errors: Incorrectly configured monitoring agents on the target Linux systems can result in incomplete or inaccurate data being collected. This might involve issues with agent installation, permissions, or communication settings.

- Monitoring Tool Configuration Errors: Misconfigured monitoring tools on the server side can lead to incorrect data interpretation, alerts, or reporting. This could involve incorrect thresholds, notification settings, or data visualization configurations.

- System Resource Constraints: Insufficient system resources, such as CPU, memory, or disk space, on either the monitoring server or the target Linux system can impact monitoring performance. This can lead to delays, dropped connections, or even system instability.

- Monitoring Agent Conflicts: Installing multiple monitoring agents on the same target system can lead to conflicts or interfere with each other’s functionality. This can cause data inconsistencies or unexpected behavior.

Troubleshooting Techniques and Solutions

Addressing remote monitoring issues often involves a combination of techniques and solutions. Here are some practical approaches:

- Verify Network Connectivity: Begin by ensuring a stable network connection between the monitoring server and the target Linux system. Use tools like `ping` or `traceroute` to check network reachability and identify potential bottlenecks.

- Review Agent Configuration: Carefully examine the configuration of the monitoring agents on the target systems. Ensure that they are properly installed, configured, and have the necessary permissions to collect data. Refer to the agent documentation for specific configuration guidelines.

- Inspect Monitoring Tool Configuration: Check the configuration settings of the monitoring tool on the server side. Verify thresholds, notification settings, data visualization options, and other relevant parameters. Make adjustments as needed to ensure accurate data interpretation and reporting.

- Monitor System Resources: Use tools like `top`, `htop`, or `free` to monitor system resources on both the monitoring server and the target Linux systems. Identify any resource constraints that might be impacting monitoring performance and take steps to address them, such as increasing memory or disk space.

- Analyze System Logs: Examine system logs on both the monitoring server and the target Linux systems for any error messages or warnings related to monitoring. These logs can provide valuable clues about the root cause of the issue.

- Isolate the Problem: If the issue persists, try isolating the problem by temporarily disabling or removing certain monitoring components or configurations. This can help pinpoint the specific source of the issue.

Role of Log Analysis and System Diagnostics

Log analysis and system diagnostics play a crucial role in troubleshooting remote monitoring issues. By examining system logs, you can gather valuable information about system events, errors, and warnings that may have contributed to the problem. This includes:

- Monitoring Agent Logs: Examine the logs generated by the monitoring agents on the target Linux systems. These logs can provide details about data collection, communication with the monitoring server, and any errors encountered.

- Monitoring Tool Logs: Review the logs generated by the monitoring tool on the server side. These logs can provide insights into data processing, alert generation, and reporting activities.

- System Logs: Analyze the general system logs on both the monitoring server and the target Linux systems. These logs can reveal information about system events, resource usage, and potential conflicts that might be affecting monitoring.

Tip: Regularly review and analyze system logs to proactively identify potential issues and address them before they escalate into major problems.
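Part of this log review can be automated. The minimal sketch below keeps log lines that mention a given component and contain an error-like keyword, then counts them by keyword. The keyword list is an assumption. Depending on how your agent logs, the lines could come from a file under `/var/log` or from `journalctl -u <unit>` output.

```python
import re
from collections import Counter

# Error-like keywords; an assumed list to adapt to your agent's messages.
PROBLEM = re.compile(r"\b(error|failed|failure|warning|denied|timeout|timed out)\b", re.IGNORECASE)


def find_problems(lines: list[str], component: str) -> list[str]:
    """Lines that mention `component` and contain an error-like keyword."""
    comp = component.lower()
    return [line for line in lines if comp in line.lower() and PROBLEM.search(line)]


def summarize(problems: list[str]) -> Counter:
    """Count matching lines by the first keyword found in each."""
    return Counter(PROBLEM.search(line).group(1).lower() for line in problems)
```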

Troubleshooting Example

Consider a scenario where a remote monitoring tool fails to collect CPU utilization data from a target Linux system.

- Initial Steps: Begin by verifying network connectivity between the monitoring server and the target system. Use tools like `ping` or `traceroute` to confirm network reachability.

- Agent Configuration: Check the configuration of the monitoring agent on the target system. Ensure that it is properly installed, configured to collect CPU utilization data, and has the necessary permissions to access system resources.

- System Logs: Examine the logs generated by the monitoring agent and the target system. Look for any error messages or warnings related to CPU data collection or access permissions.

- Resource Constraints: Monitor system resources on the target system using tools like `top` or `htop`. Check if the CPU is heavily loaded, which could potentially interfere with data collection.

- Problem Resolution: Based on the findings from the logs and resource monitoring, identify and address the root cause of the issue. This might involve adjusting the agent configuration, granting necessary permissions, or optimizing system resource usage.
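One way to catch this scenario early is to alert on missing data rather than wait for someone to notice an empty graph. The sketch below flags hosts whose most recent CPU sample is older than three collection intervals, as well as hosts that have never reported. The three-interval tolerance and the data structures are assumptions.

```python
from datetime import datetime, timedelta


def stale_hosts(expected: list[str], last_seen: dict[str, datetime], now: datetime,
                interval: timedelta, missed: int = 3) -> list[str]:
    """Hosts with no CPU sample within `missed` collection intervals, or none ever."""
    cutoff = now - interval * missed
    return sorted(h for h in expected if h not in last_seen or last_seen[h] < cutoff)
```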

Security Considerations

Remote monitoring tools provide valuable insights into system health and performance, but they also introduce security risks that must be addressed carefully.

Unauthorized access to sensitive system data can lead to data breaches, system compromise, and potential financial losses.


Secure Access Control

Secure access control is paramount for protecting sensitive data and ensuring that only authorized individuals can access and manage remote monitoring systems.

Implementing robust access control mechanisms, such as multi-factor authentication (MFA), strong passwords, and role-based access control (RBAC), is crucial for mitigating security risks.

- Multi-factor authentication (MFA): MFA adds an extra layer of security by requiring users to provide multiple forms of authentication, such as a password and a one-time code generated by a mobile app or hardware token. This makes it significantly harder for unauthorized individuals to gain access to the system, even if they have stolen a password.

- Strong passwords: Encourage users to create strong passwords that are at least 12 characters long and include a combination of uppercase and lowercase letters, numbers, and symbols. Password complexity requirements should be enforced, and users should be educated on best practices for password security.

- Role-based access control (RBAC): RBAC assigns different levels of access to users based on their roles and responsibilities within the organization. This ensures that users only have access to the data and resources they need to perform their jobs, minimizing the risk of unauthorized access and data leaks.
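To show what the one-time code in MFA actually is, the sketch below implements the time-based one-time password (TOTP) algorithm from RFC 6238, which many authenticator apps use and which builds on HOTP from RFC 4226. It assumes a raw byte secret (apps typically exchange it base32-encoded), SHA-1, 30-second steps, and six digits. It is meant for understanding only. Production systems should rely on an established, audited MFA implementation.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Code for the time step containing `at` (defaults to the current time)."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def verify(key: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept codes from adjacent steps to tolerate small clock drift."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(key, now + i * step, step), submitted)
        for i in range(-window, window + 1)
    )
```

Using `hmac.compare_digest` avoids leaking timing information when comparing the submitted code.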

Data Encryption

Data encryption is essential for protecting sensitive information transmitted between the remote monitoring tool and the monitored system.

Encrypting data both at rest and in transit ensures that even if the data is intercepted or stolen, it cannot be read without the appropriate decryption key.

- Data encryption at rest: This involves encrypting data stored on the monitored system and the remote monitoring server. This ensures that even if the system is compromised, the data remains inaccessible to unauthorized individuals.

- Data encryption in transit: This involves encrypting data while it is being transmitted between the monitored system and the remote monitoring tool. This protects the data from interception by malicious actors during network transmission.

Securing Remote Monitoring Tools and Data

Regularly updating remote monitoring tools and operating systems is crucial for patching security vulnerabilities and mitigating potential risks.

- Regular software updates: Software vendors regularly release security patches to address vulnerabilities discovered in their products. It is essential to apply these updates promptly to ensure that the remote monitoring tool is protected against known threats.

- Secure network configurations: Implement strong firewall rules to restrict access to the remote monitoring tool and the monitored system. Use network segmentation to isolate sensitive systems and applications from the rest of the network.

- Regular security audits: Conduct regular security audits to identify and address potential vulnerabilities in the remote monitoring system. This can include vulnerability scans, penetration testing, and security assessments.

- Implement strong access control policies: Define clear access control policies that specify who can access the remote monitoring system and what actions they are authorized to perform. This helps prevent unauthorized access and data manipulation.

- Use secure protocols: Use secure protocols such as SSH and HTTPS for communication between the remote monitoring tool and the monitored system. These protocols encrypt data during transmission, making it more difficult for attackers to intercept and decrypt sensitive information.
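When an agent or script sends data over HTTPS, the client should verify the server's certificate and hostname. Python's `ssl.create_default_context()` does both by default. The sketch below also sets a minimum of TLS 1.2 and refuses plain `http://` URLs. The collector URL and JSON payload are hypothetical, since every monitoring tool defines its own ingestion API.

```python
import json
import ssl
import urllib.request
from typing import Optional


def make_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Context that verifies the server certificate and hostname."""
    ctx = ssl.create_default_context(cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def push_metric(url: str, payload: dict, ctx: ssl.SSLContext, timeout: float = 5.0) -> int:
    """POST JSON to a collector endpoint (URL and payload schema are hypothetical)."""
    if not url.startswith("https://"):
        raise ValueError("refusing to send metrics over plain HTTP")
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        method="POST",
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, context=ctx, timeout=timeout) as resp:
        return resp.status
```

If the monitoring server uses an internal certificate authority, pass its certificate bundle as `ca_file` rather than disabling verification.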

Best Practices for Effective Monitoring

Implementing a robust remote monitoring strategy for your Linux systems is crucial for ensuring optimal performance, stability, and security. By proactively monitoring your systems, you can identify potential issues before they escalate into major problems, minimize downtime, and ensure your applications and services remain available to users.

Regular Monitoring and Proactive Maintenance

Regular monitoring and proactive maintenance are essential for maintaining the health and performance of your Linux systems. A well-defined monitoring schedule allows you to identify potential issues early on, preventing them from escalating into major problems.

- Establish a Regular Monitoring Schedule: Define a regular monitoring schedule based on the criticality of your systems and the frequency of potential issues. For critical systems, consider hourly or even more frequent monitoring. Less critical systems might only require daily or weekly checks.

- Automate Monitoring Tasks: Automate as many monitoring tasks as possible to minimize manual effort and ensure consistency. Use scripting or monitoring tools to collect data, generate alerts, and perform routine maintenance tasks.

- Implement Proactive Maintenance: Proactive maintenance involves performing regular updates, patching vulnerabilities, and optimizing system resources. This approach minimizes the risk of unexpected downtime and improves system security.
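A monitoring schedule tied to system criticality can be expressed as a small lookup of check intervals per tier. The tiers and intervals in this sketch are illustrative assumptions. In practice, tools such as Nagios let you set check intervals per host or service in their configuration.

```python
from datetime import datetime, timedelta

# Example tiers; the intervals are assumptions, not recommendations from any tool.
INTERVALS = {
    "critical": timedelta(minutes=1),
    "standard": timedelta(minutes=15),
    "low": timedelta(hours=24),
}


def due_checks(systems: dict[str, str], last_run: dict[str, datetime], now: datetime) -> list[str]:
    """Systems whose tier interval has elapsed since their last check."""
    due = []
    for name, tier in systems.items():
        previous = last_run.get(name)
        if previous is None or now - previous >= INTERVALS[tier]:
            due.append(name)
    return sorted(due)
```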

Optimizing Monitoring Tool Configuration

Optimizing the configuration of your monitoring tools is essential for maximizing their effectiveness and minimizing resource consumption.

- Define Clear Monitoring Objectives: Clearly define your monitoring objectives to ensure you collect the right data and set appropriate thresholds. For example, if you are monitoring CPU utilization, define a threshold that triggers an alert when usage exceeds a certain percentage.

- Configure Alerts and Notifications: Set up appropriate alerts and notifications to inform you of potential issues. Configure different notification methods, such as email, SMS, or instant messaging, based on the severity of the issue.

- Optimize Data Collection: Minimize the amount of data collected to reduce the impact on system performance. Focus on collecting data that is essential for monitoring and troubleshooting.

- Use Data Aggregation and Filtering: Use data aggregation and filtering techniques to reduce the volume of data and make it easier to analyze. For example, aggregate data from multiple servers into a single dashboard or filter out irrelevant data.
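In practice, aggregation often means downsampling raw samples into fixed time buckets and then combining hosts for a dashboard. The sketch below averages samples per 60-second bucket and takes the worst (maximum) value across hosts per bucket. The bucket size and the choice of mean and max are assumptions that depend on what the dashboard should show.

```python
from collections import defaultdict
from statistics import mean


def downsample(samples: list[tuple[float, float]], bucket_seconds: int = 60) -> dict[int, float]:
    """Average (timestamp, value) samples into fixed-width time buckets."""
    buckets = defaultdict(list)
    for ts, value in samples:
        buckets[int(ts // bucket_seconds) * bucket_seconds].append(value)
    return {start: mean(vals) for start, vals in sorted(buckets.items())}


def fleet_max(per_host: dict[str, dict[int, float]]) -> dict[int, float]:
    """Worst (maximum) value per bucket across all hosts, for one dashboard panel."""
    combined = defaultdict(list)
    for series in per_host.values():
        for start, value in series.items():
            combined[start].append(value)
    return {start: max(vals) for start, vals in sorted(combined.items())}
```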

Integration with Other Tools

Linux remote monitoring tools often play a crucial role in a broader system management strategy. Their effectiveness can be significantly enhanced by integrating them with other tools and platforms, creating a cohesive and automated ecosystem for system administration.

Integration with System Management Tools

Integrating Linux remote monitoring tools with other system management tools allows for a comprehensive and unified approach to system administration. This integration facilitates the exchange of data and enables the automation of tasks, ultimately streamlining workflows and enhancing overall efficiency.

- Configuration Management Tools (e.g., Ansible, Puppet, Chef): Integrating monitoring tools with configuration management tools allows for automated monitoring of system configurations and the detection of deviations from desired states. This ensures that changes made through configuration management tools are properly reflected in the monitoring environment.

- Ticketing Systems (e.g., Jira, Zendesk): Integrating monitoring tools with ticketing systems enables automated incident reporting and ticket creation based on predefined alert thresholds. This streamlines the incident management process and ensures that issues are addressed promptly.

- Log Management Tools (e.g., Graylog, ELK Stack): Integrating monitoring tools with log management tools provides a centralized platform for collecting, analyzing, and storing logs generated by monitored systems. This enables a holistic view of system behavior and facilitates troubleshooting by correlating log data with performance metrics.

Leveraging Automation and Orchestration

Automation and orchestration play a vital role in enhancing the efficiency and effectiveness of Linux remote monitoring. By automating tasks and orchestrating workflows, organizations can significantly reduce manual intervention, minimize errors, and optimize resource utilization.

- Automated Alerting and Notification: Automating alert and notification mechanisms ensures that relevant personnel are promptly informed of critical system events, allowing for timely intervention and preventing potential issues from escalating.

- Automated Remediation: In certain scenarios, automated remediation actions can be implemented based on predefined rules and thresholds. This allows for the automatic resolution of minor issues, reducing the need for manual intervention and minimizing downtime.

- Orchestration of Monitoring Workflows: Orchestrating monitoring workflows enables the automation of complex monitoring tasks, such as the collection and analysis of data from multiple sources, the generation of reports, and the triggering of specific actions based on predefined criteria.
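The following minimal sketch illustrates rule-based remediation. It restarts a systemd unit after three consecutive failed health checks and resets the counter after acting. The threshold and unit name are assumptions, and the sketch defaults to a dry run that only records the command. A real deployment would also need appropriate privileges, rate limiting, and an alert to humans when automated fixes keep recurring.

```python
import subprocess


class RestartOnFailure:
    """Issue `systemctl restart <unit>` after `threshold` consecutive failures."""

    def __init__(self, unit: str, threshold: int = 3, dry_run: bool = True):
        self.unit = unit
        self.threshold = threshold
        self.dry_run = dry_run
        self.failures = 0
        self.actions: list[list[str]] = []  # commands issued, kept for auditing

    def record(self, healthy: bool) -> bool:
        """Record one check result; return True if a restart was triggered."""
        if healthy:
            self.failures = 0
            return False
        self.failures += 1
        if self.failures < self.threshold:
            return False
        cmd = ["systemctl", "restart", self.unit]
        self.actions.append(cmd)
        if not self.dry_run:
            subprocess.run(cmd, check=True)  # requires sufficient privileges
        self.failures = 0  # start counting afresh after acting
        return True
```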

Examples of Improved System Management Efficiency

The integration of Linux remote monitoring tools with other systems management tools can significantly improve overall system management efficiency in various ways:

- Proactive Problem Identification and Resolution: By correlating data from different sources, organizations can identify potential issues before they impact system performance or availability. This proactive approach enables timely intervention and prevents disruptions to critical services.

- Reduced Mean Time to Resolution (MTTR): Automating incident reporting, notification, and remediation processes significantly reduces the time required to identify and resolve issues. This translates into reduced downtime and improved service availability.

- Improved System Visibility and Control: Integrating monitoring tools with other systems management tools provides a unified view of system health, performance, and configuration. This comprehensive visibility enables organizations to make informed decisions regarding system management and resource allocation.
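MTTR is the total time spent resolving incidents divided by the number of incidents. The sketch below assumes each incident is recorded as a (detected, resolved) timestamp pair. Organizations differ on whether the clock starts at detection or at the start of the failure, so treat that choice as an assumption.

```python
from datetime import datetime, timedelta


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - detected) over all resolved incidents."""
    if not incidents:
        raise ValueError("no incidents")
    total = sum((resolved - detected for detected, resolved in incidents), timedelta())
    return total / len(incidents)
```

Tracking this value before and after introducing automated alerting or remediation is one way to check whether the integration actually pays off.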

Future Trends in Linux Remote Monitoring

The landscape of Linux remote monitoring is constantly evolving, driven by technological advancements and changing IT needs. Emerging trends are shaping the future of this field, with significant implications for how we manage and optimize Linux systems.

Impact of Cloud Computing

Cloud computing has revolutionized the way we deploy and manage applications. As more organizations adopt cloud-based infrastructure, the need for effective remote monitoring tools that can seamlessly integrate with cloud environments becomes increasingly critical.

- Cloud-Native Monitoring Tools: These tools are specifically designed to monitor cloud-based applications and infrastructure, providing real-time insights into resource utilization, performance, and security. They leverage APIs and integrations with cloud providers to gather data and provide comprehensive visibility into cloud environments. Examples include Amazon CloudWatch, Google Cloud Monitoring, and Microsoft Azure Monitor.

- Hybrid Cloud Monitoring: As organizations move towards hybrid cloud architectures, the need for monitoring tools that can manage both on-premises and cloud environments arises. These tools should provide a unified view of all infrastructure components, regardless of their location, enabling centralized management and analysis.

- Serverless Monitoring: The rise of serverless computing brings new challenges for monitoring. Serverless functions are ephemeral and can scale dynamically, making it difficult to track their performance and identify issues. Specialized monitoring tools are emerging to address these challenges, providing insights into function execution times, resource consumption, and error rates.

Role of Artificial Intelligence and Machine Learning

AI and ML are transforming various industries, and remote monitoring is no exception. These technologies can enhance the capabilities of monitoring tools, enabling them to analyze vast amounts of data, detect anomalies, and predict potential issues before they occur.

- Anomaly Detection: AI algorithms can analyze historical data and identify patterns that deviate from normal behavior. This helps identify potential issues early on, before they impact system performance. For example, AI can detect sudden spikes in CPU usage or network traffic that might indicate a security breach or resource bottleneck.

- Predictive Maintenance: AI-powered monitoring tools can predict potential failures based on historical data and real-time system metrics. This allows IT teams to proactively address issues before they cause downtime or performance degradation. For example, an AI model might predict a hard drive failure based on its temperature, read/write speeds, and other metrics.

- Automated Incident Response: AI can automate the process of incident response by identifying and classifying alerts, prioritizing critical issues, and even taking automated actions to mitigate the impact of incidents. This reduces the workload of IT teams and improves response times.
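Production AI-based anomaly detection uses much richer models. The basic idea of comparing new values against a learned baseline can still be shown with a simple statistical stand-in. The sketch below uses a rolling z-score, not machine learning: it flags a value that lies more than three standard deviations from the mean of the previous 20 samples. The window size and threshold are assumptions.

```python
from statistics import mean, pstdev


def anomalies(values: list[float], window: int = 20, z_threshold: float = 3.0) -> list[int]:
    """Indices whose value deviates more than z_threshold standard deviations
    from the mean of the preceding `window` values."""
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu, sigma = mean(baseline), pstdev(baseline)
        if sigma == 0:
            if values[i] != mu:  # any change from a perfectly flat baseline
                flagged.append(i)
            continue
        if abs(values[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged
```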

Future of Remote Monitoring

Remote monitoring is evolving to become more proactive, intelligent, and integrated into modern IT environments.

- Proactive Monitoring: Future monitoring tools will shift from simply reacting to problems to proactively identifying and addressing potential issues before they occur. This will involve leveraging AI, machine learning, and predictive analytics to analyze data and anticipate potential problems.

- Intelligent Automation: Automation will play a significant role in the future of remote monitoring. AI-powered tools will automate routine tasks such as alert generation, incident response, and performance optimization. This will free up IT teams to focus on more strategic tasks.

- Integration with DevOps and SRE: Remote monitoring tools will be seamlessly integrated with DevOps and SRE practices. They will provide insights into application performance, infrastructure health, and security, enabling teams to optimize their workflows and improve overall system reliability.

Ending Remarks

In today’s dynamic IT landscape, effective remote monitoring is crucial for maintaining the reliability and security of Linux systems. By utilizing the powerful features of Linux remote monitoring tools, administrators can gain valuable insights, optimize performance, and ensure the smooth operation of their infrastructure. From real-time data collection and analysis to proactive alert notifications, these tools empower you to stay ahead of potential issues and ensure the seamless functioning of your Linux environment.