Operating systems, the invisible foundation of our digital world, manage the complex interactions between hardware and software. From the familiar Windows and macOS interfaces on our desktops to the systems powering smartphones and servers, operating systems provide the essential framework for all our digital experiences.

The core functions of an operating system are memory management, process control, file organization, and I/O handling. These functions handle resource allocation and keep applications running smoothly and securely, ultimately allowing us to work, play, and connect seamlessly.

Introduction to Operating Systems

An operating system (OS) acts as the intermediary between a computer’s hardware and the software applications that users run. It’s the foundation upon which everything else operates. Think of it as the conductor of an orchestra, ensuring all the instruments (hardware components) work together harmoniously to create a beautiful performance (the software applications you use).

Common Operating Systems

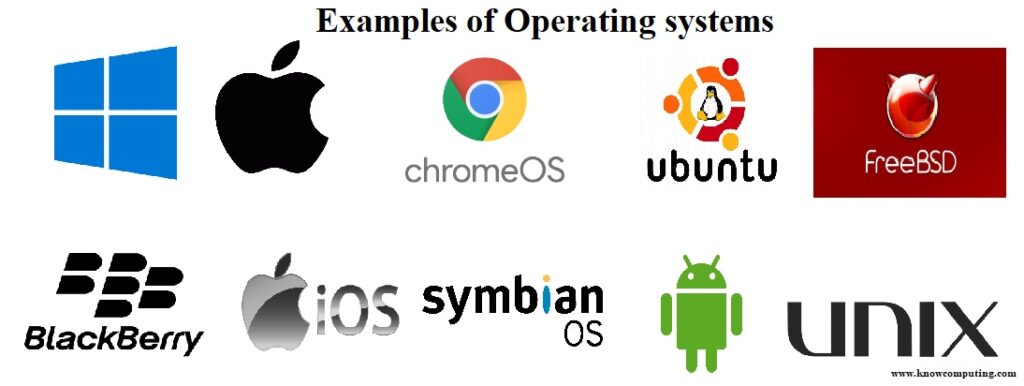

Operating systems are ubiquitous, powering everything from smartphones to supercomputers. Here are some prominent examples:

- Windows: Developed by Microsoft, Windows is the most widely used desktop operating system globally. It’s known for its user-friendly interface and vast software compatibility.

- macOS: Apple’s macOS is renowned for its sleek design, intuitive interface, and tight integration with Apple’s ecosystem.

- Linux: Linux is an open-source operating system known for its flexibility, stability, and power. It’s popular among developers and system administrators.

Core Functions of an Operating System

Operating systems perform crucial tasks to manage the computer’s resources effectively. These core functions include:

- Memory Management: The OS allocates and manages the computer’s memory, ensuring that different programs can access and use it efficiently without conflicting with each other.

- Process Management: The OS manages the execution of multiple programs at once. On a single CPU core it switches between them rapidly to create the illusion of parallel processing; on multicore hardware it can also run them truly in parallel.

- File Management: The OS provides a hierarchical file system for organizing and storing data on the computer’s storage devices. It enables users to create, delete, access, and modify files easily.

- I/O Handling: The OS manages communication between the computer and its peripherals, such as keyboards, mice, printers, and network devices.

Operating System Architecture

The operating system’s architecture defines its internal structure and how its various components interact to manage system resources. This architecture is designed to provide a robust and efficient platform for running applications. The core of this architecture is the kernel, a software layer that acts as the intermediary between the hardware and user applications.

Kernel Components

The kernel is the heart of the operating system, responsible for managing the system’s resources and providing a platform for applications to run. It is a complex piece of software, typically composed of several essential components:

- Process Manager: The process manager is responsible for managing the execution of processes, including creating, scheduling, and terminating them. It determines which process gets to use the CPU at any given time, ensuring fair and efficient allocation of processing power.

- Memory Manager: The memory manager handles the allocation and deallocation of memory to processes. It ensures that processes have enough memory to run and isolates them from one another, so that one process cannot read or overwrite another’s memory. It also reclaims a process’s memory when the process exits.

- File System Manager: The file system manager manages the organization and storage of files on the system. It provides a hierarchical structure for files and directories, allowing users to easily access and manage their data.

- Device Drivers: Device drivers act as the interface between the kernel and hardware devices, such as hard drives, network cards, and printers. They translate commands from the kernel into instructions that the hardware can understand, enabling the operating system to control and utilize these devices.

- Interrupt Handler: The interrupt handler manages the responses to interrupts generated by hardware devices. It allows the operating system to react to events, such as data arrival from a network card or a disk read completion, ensuring timely and efficient system operation.

- System Call Interface: The system call interface provides a way for user applications to interact with the kernel. Applications use system calls to request services from the kernel, such as reading and writing files, creating processes, or accessing hardware devices.

Kernel Functions

The kernel’s role extends beyond simply managing system resources. It performs a wide range of critical functions, including:

- Process Scheduling: The kernel uses a scheduling algorithm to determine which process should run next. Different algorithms exist, each with its own strengths and weaknesses, balancing factors like fairness, responsiveness, and throughput. The goal is to ensure that all processes get a fair share of CPU time, while also prioritizing important tasks.

- Memory Allocation: The kernel manages the allocation of memory to processes, ensuring that each process has enough memory to operate correctly. This includes allocating physical memory, mapping virtual memory onto it, and limiting fragmentation to optimize resource utilization.

- Device Management: Through its device drivers, the kernel translates generic requests into instructions that each device can understand, allowing the operating system to control and utilize hardware resources.

- File System Management: The kernel manages the organization and storage of files on the system, providing a consistent and efficient way for users to access and manage their data. This includes managing file permissions, directories, and file system metadata.

- Security and Protection: The kernel plays a crucial role in protecting the system from unauthorized access and malicious attacks. It enforces security policies, manages user accounts, and controls access to system resources, ensuring the integrity and confidentiality of the system.

- Inter-Process Communication: The kernel provides mechanisms for processes to communicate with each other, enabling them to share data and coordinate their activities. This is essential for complex applications that involve multiple cooperating processes.

Kernel and User Applications

The kernel acts as the intermediary between user applications and the hardware. Applications run in user space, while the kernel runs in kernel space. This separation is crucial for security and stability. User applications cannot directly access hardware or modify the kernel, which limits the damage that buggy or malicious code can cause.

Applications interact with the kernel through system calls, requesting services like file access, process creation, or network communication. The kernel verifies these requests, ensures they are legitimate, and then performs the requested action. This interaction ensures that applications operate within the boundaries set by the operating system, maintaining system stability and security.
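
To make the idea of a system call concrete, here is a minimal sketch in Python. It assumes that the functions in Python's `os` module are thin wrappers around the underlying system calls (on POSIX systems, roughly `write`, `lseek`, `read`, `close`, and `unlink`). The helper name `write_then_read` is hypothetical and exists only for illustration.

```python
import os
import tempfile


def write_then_read(message: bytes) -> bytes:
    """Round-trip bytes through a temporary file using low-level os calls."""
    # mkstemp asks the kernel to create and open a file; it returns a file descriptor.
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, message)             # request: write these bytes to the file
        os.lseek(fd, 0, os.SEEK_SET)      # request: move the file offset back to the start
        return os.read(fd, len(message))  # request: read the bytes back
    finally:
        os.close(fd)                      # release the descriptor
        os.remove(path)                   # delete the temporary file


print(write_then_read(b"hello kernel"))
```

Each call crosses from user space into kernel space, where the kernel checks the request (for example, that the file descriptor is valid) before acting on it.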

Process Management

Process management is a fundamental aspect of operating systems, responsible for overseeing the execution of programs and managing their resources. It encompasses various tasks, including process creation, execution, termination, scheduling, and communication.

Process Lifecycle

The process lifecycle describes the stages a process undergoes from its creation to its termination.

- Creation: A process begins its life when a program is loaded into memory and the operating system allocates resources, such as memory space, CPU time, and file descriptors. The process is then assigned a unique identifier, known as the process ID (PID), for tracking and management.

- Execution: Once created, the process enters the execution state, where it actively uses the CPU to execute its instructions. The process cycles through different states during its execution, including running, ready, and waiting.

- Termination: A process ends its life when it completes its execution or is terminated by the operating system or a user. Termination involves releasing resources, closing files, and removing the process from the system’s process table.
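
The three stages above can be observed from a user program. The following is a small sketch, assuming a Python interpreter is available to launch as the child process; `run_child` is a hypothetical helper name.

```python
import subprocess
import sys


def run_child(exit_code: int) -> tuple[int, int]:
    """Create a child process, let it run, and wait for it to terminate."""
    # Creation: the OS loads a new Python interpreter and assigns it a PID.
    child = subprocess.Popen(
        [sys.executable, "-c", f"import sys; sys.exit({exit_code})"]
    )
    pid = child.pid
    # Execution and termination: wait() blocks until the child exits,
    # then returns its exit status.
    status = child.wait()
    return pid, status


pid, status = run_child(3)
print(f"child PID {pid} exited with status {status}")
```

The PID differs from run to run, while the exit status is whatever the child passed to `sys.exit`.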

Process Scheduling

Process scheduling is the mechanism by which the operating system determines which process should be executed at any given time. This is crucial for ensuring fair resource allocation and maximizing system efficiency.

There are various scheduling algorithms, each with its own strengths and weaknesses, designed to address different system requirements. Some common algorithms include:

- First-Come, First-Served (FCFS): Processes are executed in the order they arrive in the ready queue. This is a simple algorithm but can lead to long wait times for short processes.

- Shortest Job First (SJF): The process with the shortest estimated execution time is selected for execution. This algorithm minimizes the average waiting time but requires accurate estimation of execution times.

- Priority Scheduling: Processes are assigned priorities, and the process with the highest priority is executed first. This can be useful for real-time applications or processes with critical requirements but can lead to starvation of low-priority processes.

- Round-Robin: Each process is given a fixed time slice, and the CPU is switched between processes in a circular manner. This algorithm provides fair CPU allocation and prevents starvation but can result in increased context switching overhead.

- Multilevel Feedback Queue: Processes are assigned to different queues with different priorities. Processes can move between queues based on their behavior, such as CPU bursts or waiting times. This algorithm attempts to balance fairness and efficiency.
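
To compare some of these algorithms, the sketch below computes per-process waiting times for FCFS, non-preemptive SJF, and round-robin. It makes simplifying assumptions that are not part of any real scheduler: all processes arrive at time 0, burst times are known in advance, and context switches cost nothing. The burst values and the quantum are made up for illustration.

```python
from collections import deque


def fcfs_waits(bursts):
    """First-Come, First-Served: each process waits for all earlier arrivals."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)
        clock += burst
    return waits


def sjf_waits(bursts):
    """Non-preemptive Shortest Job First: run processes in order of burst time."""
    order = sorted(range(len(bursts)), key=lambda i: bursts[i])
    waits, clock = [0] * len(bursts), 0
    for i in order:
        waits[i] = clock
        clock += bursts[i]
    return waits


def round_robin_waits(bursts, quantum):
    """Round-robin: each process runs for at most one quantum per turn."""
    remaining = list(bursts)
    finish = [0] * len(bursts)
    queue = deque(range(len(bursts)))
    clock = 0
    while queue:
        i = queue.popleft()
        run = min(quantum, remaining[i])
        clock += run
        remaining[i] -= run
        if remaining[i] > 0:
            queue.append(i)      # not finished: go to the back of the queue
        else:
            finish[i] = clock    # finished: record completion time
    # Waiting time = completion time minus time actually spent on the CPU.
    return [finish[i] - bursts[i] for i in range(len(bursts))]


bursts = [24, 3, 3]  # hypothetical CPU bursts, in milliseconds
for name, waits in [
    ("FCFS", fcfs_waits(bursts)),
    ("SJF", sjf_waits(bursts)),
    ("Round-robin (quantum 4)", round_robin_waits(bursts, 4)),
]:
    print(f"{name}: waits={waits}, average={sum(waits) / len(waits):.2f}")
```

With these bursts, the average waiting time is 17 under FCFS, 3 under SJF, and about 5.67 under round-robin, which illustrates how a long job at the front of the queue penalizes short jobs under FCFS.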

Process Synchronization and Communication

Process synchronization and communication are essential for coordinating the activities of multiple processes that share resources or need to interact with each other.

- Synchronization: Synchronization ensures that processes access shared resources in a controlled manner, preventing race conditions and data inconsistencies. Common synchronization mechanisms include semaphores, mutexes, and monitors.

- Communication: Processes need to communicate with each other to exchange data or coordinate their actions. Communication mechanisms include pipes, message queues, shared memory, and sockets.
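
As an illustration of synchronization, the following sketch uses a mutex (`threading.Lock`) to protect a shared counter. It uses threads rather than separate processes to keep the example short; the same principle applies to processes sharing memory. The function name `locked_count` is hypothetical.

```python
import threading


def locked_count(num_threads: int, increments: int) -> int:
    """Increment a shared counter from several threads, guarded by a mutex."""
    counter = 0
    lock = threading.Lock()

    def worker():
        nonlocal counter
        for _ in range(increments):
            # Only one thread at a time may execute the read-modify-write below.
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter


print(locked_count(4, 1000))
```

Because every increment happens while holding the lock, the final value always equals the number of threads multiplied by the number of increments. Without the lock, two threads could read the same old value and one update could be lost.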

Memory Management

Memory management is a crucial aspect of operating systems, responsible for allocating and managing the system’s memory resources. It ensures that different processes can access and use memory efficiently, preventing conflicts and maximizing resource utilization.

Memory Allocation Techniques

Memory allocation techniques determine how the system allocates memory to different processes. Two common techniques are paging and segmentation.

- Paging divides the physical memory into fixed-size units called frames, and the logical memory of a process into equal-sized units called pages. When a process needs to access a page, it is loaded into a frame in physical memory. This allows for non-contiguous memory allocation, where pages of a process can be scattered across physical memory.

- Segmentation divides the logical memory of a process into variable-sized segments, each representing a logical unit of the program, such as code, data, or stack. Segments are allocated in contiguous blocks of physical memory. This technique allows for better memory organization and protection, as each segment can have different access rights.
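
Under paging, a logical address is split into a page number and an offset, and the page table maps the page number to a frame. The sketch below shows that arithmetic. The 4096-byte page size and the page table contents are assumptions chosen for illustration, and real systems perform this translation in hardware.

```python
PAGE_SIZE = 4096  # assumed page and frame size in bytes


def translate(logical_address: int, page_table: dict[int, int]) -> int:
    """Translate a logical address to a physical address via a page table."""
    page, offset = divmod(logical_address, PAGE_SIZE)
    if page not in page_table:
        # The page is not resident in physical memory.
        raise LookupError(f"page fault: page {page} is not resident")
    frame = page_table[page]
    return frame * PAGE_SIZE + offset


# Hypothetical page table: page number -> frame number.
page_table = {0: 5, 1: 2, 2: 9}

# Address 4100 is page 1, offset 4; page 1 lives in frame 2.
print(translate(4100, page_table))  # 2 * 4096 + 4 = 8196
```

Note that consecutive pages (0, 1, 2) map to scattered frames (5, 2, 9), which is the non-contiguous allocation described above.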

Virtual Memory

Virtual memory is a technique that allows a process to use more memory than is physically available. It creates the illusion of a larger memory space by combining physical memory with secondary storage (e.g., a hard disk). When a process accesses a memory location, the hardware and operating system check whether the page containing it is already in physical memory. If it is not, a page fault occurs, and the operating system retrieves the required page from secondary storage and loads it into a free frame, evicting another page if no frame is free. Loading pages only when they are needed is called demand paging; moving pages between memory and disk is often called swapping.

Virtual memory provides several benefits, including:

- Increased process size: Processes can be larger than the physical memory, enabling the execution of larger programs.

- Multiprogramming efficiency: Multiple processes can run concurrently, even if their combined memory requirements exceed the physical memory.

- Improved resource utilization: Physical memory can be shared efficiently among multiple processes, reducing the need for large amounts of physical memory.
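
When physical memory is full, the operating system must choose a page to evict before loading a new one. The sketch below counts page faults under a least-recently-used (LRU) policy. LRU is an assumption for illustration, since the text above does not name a replacement policy, and the reference string is a made-up sequence of page numbers.

```python
from collections import OrderedDict


def count_page_faults(reference_string, num_frames):
    """Count page faults for a sequence of page accesses under LRU replacement."""
    frames = OrderedDict()  # resident pages, ordered from least to most recently used
    faults = 0
    for page in reference_string:
        if page in frames:
            frames.move_to_end(page)        # hit: mark as most recently used
        else:
            faults += 1                     # miss: the page must be loaded
            if len(frames) >= num_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = True
    return faults


references = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print(count_page_faults(references, 3))  # 12 faults with 3 frames
print(count_page_faults(references, 6))  # 6 faults: one per distinct page
```

Giving the process more frames reduces the number of faults, which is why heavy memory pressure slows a system down.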

Memory Management Challenges

Memory management faces several challenges, including:

- Fragmentation: Over time, memory allocation and deallocation can lead to fragmentation, where free memory is scattered in small, unusable chunks. This reduces the efficiency of memory allocation, as large processes may not be able to find contiguous space to allocate.

- Memory leaks: Memory leaks occur when a process allocates memory but fails to release it when it is no longer needed. This can lead to a gradual depletion of available memory, eventually causing system instability.
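
External fragmentation can be illustrated with a toy free list. In the sketch below, the hole sizes are hypothetical and the allocator uses a first-fit strategy, which is one of several possible placement strategies and is chosen here only for simplicity.

```python
def first_fit(holes, request):
    """Return the index of the first free block that can hold the request, or None."""
    for index, size in enumerate(holes):
        if size >= request:
            return index
    return None


holes = [100, 80, 120]  # hypothetical free blocks, in KB, separated by allocated regions

print(sum(holes))             # 300 KB free in total
print(first_fit(holes, 200))  # None: no single hole is large enough
print(first_fit(holes, 110))  # 2: only the third hole fits
```

Although 300 KB is free in total, a 200 KB request that needs contiguous space cannot be satisfied. Paging avoids this particular problem because pages do not need to be contiguous in physical memory.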

File Management

File management is a crucial aspect of operating systems, responsible for organizing and accessing data stored on the system. It provides mechanisms for creating, deleting, reading, and writing files, as well as managing directories and file system structures.

File System Hierarchy and File Organization

File systems organize files and directories in a hierarchical structure, resembling an inverted tree. The topmost level is known as the root directory, which branches out into subdirectories, each containing files and potentially further subdirectories. This hierarchical structure provides a systematic way to organize and access files, making it easier to locate and manage data.

File Access Methods

Operating systems offer various methods for accessing files, each with its own characteristics and suitability for different applications.

Sequential Access

Sequential access methods read or write data in a linear fashion, starting from the beginning of the file and proceeding sequentially. This method is simple to implement and suitable for applications where data is processed in a linear order, such as text editors or log files.

Direct Access

Direct access methods allow random access to any part of the file without having to read through preceding data. This method is efficient for applications that require frequent random access to data, such as databases or image editing software.

Indexed Sequential Access

Indexed sequential access methods combine the advantages of sequential and direct access. They allow sequential access for processing data in order, while also providing an index for direct access to specific records. This method is suitable for applications that require both sequential and random access to data, such as transaction processing systems.
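
The difference between sequential and direct access can be sketched with a file of fixed-size records. The 16-byte record size, the file name, and the helper names are assumptions for illustration; the example also assumes each record is ASCII text no longer than the record size.

```python
import os
import tempfile

RECORD_SIZE = 16  # assumed fixed record length in bytes


def write_records(path, records):
    """Store each record padded with spaces to RECORD_SIZE bytes."""
    with open(path, "wb") as f:
        for record in records:
            f.write(record.encode("ascii").ljust(RECORD_SIZE, b" "))


def read_sequential(path):
    """Sequential access: read records one after another from the start."""
    records = []
    with open(path, "rb") as f:
        while chunk := f.read(RECORD_SIZE):
            records.append(chunk.decode("ascii").rstrip())
    return records


def read_direct(path, index):
    """Direct access: jump straight to record `index` without reading earlier ones."""
    with open(path, "rb") as f:
        f.seek(index * RECORD_SIZE)
        return f.read(RECORD_SIZE).decode("ascii").rstrip()


def demo():
    with tempfile.TemporaryDirectory() as directory:
        path = os.path.join(directory, "records.dat")
        write_records(path, ["alpha", "beta", "gamma"])
        return read_sequential(path), read_direct(path, 2)


print(demo())
```

An indexed sequential scheme would add a lookup table from record keys to positions, so that `read_direct` could be called with a key instead of a numeric position.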

File System Utilities

File system utilities are tools provided by operating systems to manage files and directories. These utilities facilitate various operations, including:

Listing and Displaying Files

The ‘ls’ command in Unix-like systems, for example, lists files and directories within a specified directory. This allows users to view the contents of a directory and identify specific files.

Creating and Deleting Files and Directories

Utilities like ‘mkdir’ and ‘rmdir’ create and delete directories, respectively, while commands like ‘touch’ and ‘rm’ create and delete files. These utilities enable users to manage the file system structure and organize data effectively.

Copying and Moving Files

Commands like ‘cp’ and ‘mv’ allow users to copy and move files between directories or to different locations within the file system. This facilitates data transfer and organization.

Renaming Files and Directories

The ‘mv’ command can also be used to rename files and directories by specifying a new name. This allows users to change file names or directory names for better organization or identification.

Searching for Files

Utilities like ‘find’ allow users to search for files based on specific criteria, such as file name, size, or modification date. This helps users locate specific files within the file system.
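
The same operations are available to programs through library calls. The sketch below mirrors several of the commands above using Python's `pathlib` and `shutil` modules inside a temporary directory. The file and directory names are hypothetical, and the shell commands in the comments are approximate equivalents.

```python
import shutil
import tempfile
from pathlib import Path


def demo_file_operations() -> list[str]:
    """Create, copy, rename, search for, and delete files in a scratch directory."""
    with tempfile.TemporaryDirectory() as root:
        base = Path(root)
        docs = base / "docs"
        docs.mkdir()                                    # mkdir docs
        note = docs / "note.txt"
        note.touch()                                    # touch docs/note.txt
        copy = docs / "copy.txt"
        shutil.copy(note, copy)                         # cp docs/note.txt docs/copy.txt
        shutil.move(str(copy), str(docs / "renamed.txt"))  # mv docs/copy.txt docs/renamed.txt
        # find . -name '*.txt'
        found = sorted(path.name for path in base.rglob("*.txt"))
        note.unlink()                                   # rm docs/note.txt
        return found


print(demo_file_operations())
```

The function returns the names found by the search step, before the final deletion.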

Permissions and Access Control

File system utilities also provide mechanisms for managing permissions and access control. These mechanisms allow users to control who has access to specific files and what actions they can perform on them. This ensures data security and integrity.
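
On Unix-like systems, permissions are commonly expressed as mode bits for the owner, the group, and everyone else. The following sketch sets and reads back those bits, much as `chmod` and `ls -l` would. It assumes a POSIX system; on Windows, `os.chmod` only honors the read-only flag. The helper name is hypothetical.

```python
import os
import stat
import tempfile


def set_and_read_mode(mode: int) -> int:
    """Apply permission bits to a temporary file and read them back."""
    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        os.chmod(path, mode)                         # similar to: chmod 640 file
        return stat.S_IMODE(os.stat(path).st_mode)   # keep only the permission bits
    finally:
        os.remove(path)


# 0o640: owner may read and write, group may read, others have no access.
print(oct(set_and_read_mode(0o640)))
```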

Input/Output Management

Input/Output (I/O) management is a crucial aspect of operating systems, responsible for facilitating communication between the system and external devices. This involves managing data transfer between the CPU, memory, and various peripherals such as keyboards, displays, hard drives, and network interfaces.

Device Drivers

Device drivers are specialized software modules that act as intermediaries between the operating system and hardware devices. They provide a standardized interface for the operating system to interact with different types of hardware, regardless of their underlying complexities.

- Role of Device Drivers: Device drivers translate high-level I/O requests from the operating system into low-level commands that the specific hardware device understands. They handle device-specific operations, such as initializing the device, controlling data flow, and managing error handling.

- Benefits of Device Drivers: Device drivers enable the operating system to interact with a wide range of hardware devices without needing to know the specific details of each device. They also provide a layer of abstraction, simplifying the development of applications and reducing the burden on the operating system.

- Examples of Device Drivers: Examples include drivers for graphics cards, network adapters, sound cards, and storage devices.

I/O Techniques

Operating systems employ various techniques to manage I/O operations efficiently. Two common methods are interrupt-driven I/O and Direct Memory Access (DMA).

- Interrupt-Driven I/O: In this technique, the CPU initiates an I/O operation and then continues with other tasks. The device controller signals the CPU via an interrupt when the I/O operation is complete. This allows the CPU to perform other tasks while waiting for the I/O operation to finish.

- Direct Memory Access (DMA): DMA is a more efficient technique that allows devices to directly transfer data to and from memory without involving the CPU. The device controller handles the data transfer, freeing up the CPU for other tasks. This significantly improves I/O performance, especially for large data transfers.

Challenges of Managing I/O Devices

Managing I/O devices effectively poses several challenges for operating systems.

- Data Transfer Efficiency: Ensuring efficient data transfer between devices and memory is crucial. Techniques like buffering, caching, and DMA help optimize data flow.

- Device Conflicts: Multiple devices may compete for access to shared resources, such as the bus or memory. The operating system needs to manage these conflicts to prevent data corruption and ensure fair resource allocation.

- Error Handling: I/O operations can encounter errors, such as device failures or data corruption. The operating system must handle these errors gracefully and provide appropriate feedback to the user.

- Device Compatibility: The operating system must be able to support a wide range of devices with varying functionalities and interfaces. Device drivers play a critical role in ensuring compatibility.

Security and Protection

Operating systems are the foundation of modern computing, managing system resources and providing a platform for applications. However, they are also vulnerable to various security threats that can compromise user data, system integrity, and overall functionality. Therefore, operating systems incorporate robust security mechanisms to protect against these threats and ensure secure operation.

Security Threats

Operating systems face a wide range of security threats that can exploit vulnerabilities in the system architecture, software, or user behavior. These threats can be categorized as follows:

- Malware: Malicious software designed to infiltrate and damage systems. This includes viruses, worms, trojans, and ransomware.

- Unauthorized Access: Gaining access to system resources or data without proper authorization. This can be achieved through brute force attacks, social engineering, or exploiting system vulnerabilities.

- Data Breaches: Compromising sensitive data, such as personal information, financial records, or intellectual property. This can result from malware infections, unauthorized access, or system failures.

- Denial-of-Service Attacks: Overloading system resources, preventing legitimate users from accessing services. These attacks can be launched through flooding servers with requests or exploiting vulnerabilities in system protocols.

- System Corruption: Modifying or deleting system files, compromising system stability and functionality. This can be caused by malware, unauthorized access, or hardware failures.

Protection Mechanisms

To mitigate these threats, operating systems employ various security mechanisms that protect system resources and user data:

Authentication

Authentication is the process of verifying the identity of a user or process attempting to access system resources. It ensures that only authorized entities can access sensitive information or perform critical operations. Common authentication methods include:

- Password-based authentication: Users provide a username and password to access the system. This method is widely used but vulnerable to brute force attacks and password theft.

- Multi-factor authentication (MFA): Requires users to provide multiple authentication factors, such as a password, a one-time code, or a biometric scan. This adds an extra layer of security by making it harder for unauthorized individuals to gain access.

- Biometric authentication: Uses unique biological characteristics, such as fingerprints, facial features, or iris patterns, to verify user identity. Biometrics are harder to guess or share than passwords, but they cannot be changed if compromised and can be expensive to implement.
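
One common way to reduce the impact of password theft is to store a salted, deliberately slow hash instead of the password itself. The sketch below uses PBKDF2 from Python's standard library. The iteration count and function names are assumptions for illustration, not a recommendation for any particular system.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 200_000  # assumed work factor; higher values slow down brute-force attacks


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Derive a hash from a password; generate a random salt if none is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("guess", salt, stored))                         # False
```

Only the salt and the derived hash need to be stored, so a stolen credential database does not directly reveal the passwords.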

Access Control

Access control mechanisms restrict access to system resources based on user identity and permissions. This prevents unauthorized users from accessing sensitive data or performing actions that could compromise system security. Common access control mechanisms include:

- Role-based access control (RBAC): Assigns users to specific roles, each with defined permissions. This simplifies access control management and ensures that users have only the permissions they need to perform their duties.

- Access control lists (ACLs): Lists that define the permissions granted to specific users or groups for each resource. This allows fine-grained control over access to individual files, directories, or other resources.

- Mandatory access control (MAC): Enforces strict access control policies based on security labels assigned to users and resources. This is typically used in highly secure environments, such as government or military systems.
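
The difference between RBAC and ACLs can be sketched with two small lookup tables. All users, roles, resources, and permissions below are hypothetical.

```python
# RBAC: permissions attach to roles, and users are assigned roles.
ROLE_PERMISSIONS = {
    "admin": {"read", "write", "delete"},
    "editor": {"read", "write"},
    "viewer": {"read"},
}
USER_ROLES = {"alice": "admin", "bob": "viewer"}

# ACL: permissions attach to each resource, listed per user.
ACL = {
    "/srv/report.txt": {"bob": {"read"}, "carol": {"read", "write"}},
}


def rbac_allows(user: str, action: str) -> bool:
    """Allow the action only if the user's role grants it."""
    role = USER_ROLES.get(user)
    return action in ROLE_PERMISSIONS.get(role, set())


def acl_allows(user: str, resource: str, action: str) -> bool:
    """Allow the action only if the resource's ACL lists it for this user."""
    return action in ACL.get(resource, {}).get(user, set())


print(rbac_allows("alice", "delete"))                  # True: admins may delete
print(rbac_allows("bob", "write"))                     # False: viewers may only read
print(acl_allows("carol", "/srv/report.txt", "write"))  # True: listed in the ACL
print(acl_allows("bob", "/srv/report.txt", "write"))    # False: bob has read only
```

Both checks deny by default: an unknown user, role, or resource yields no permissions.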

Security Updates and Patches

Operating system vendors continuously release security updates and patches to address vulnerabilities discovered in their software. These updates fix bugs, patch security holes, and improve system security. It is crucial for users to install these updates promptly to protect their systems from known vulnerabilities.

Security Best Practices

- Use strong passwords: Choose complex passwords that are difficult to guess and avoid using the same password for multiple accounts.

- Enable multi-factor authentication: Use MFA whenever possible to add an extra layer of security to your accounts.

- Keep your software up to date: Install security updates and patches promptly to address known vulnerabilities.

- Be cautious of suspicious emails and links: Avoid clicking on links or opening attachments from unknown senders.

- Use antivirus software: Install and regularly update antivirus software to protect your system from malware.

- Be aware of phishing attacks: Phishing attacks attempt to trick users into revealing sensitive information. Be cautious of emails or websites that ask for personal information or login credentials.

- Back up your data: Regularly back up your important data to protect against data loss due to hardware failures or malware attacks.

Networking

The operating system plays a vital role in enabling and managing network communication within a computer system. It provides the necessary framework and tools for applications to interact with the network, send and receive data, and connect with other devices.

Network Protocols

Network protocols define the rules and standards that govern communication between devices on a network. They ensure that data is transmitted and received correctly, regardless of the underlying hardware or software. Operating systems typically support a wide range of network protocols; the most widely used belong to the TCP/IP suite, whose two main transport protocols are TCP and UDP.

- TCP/IP (Transmission Control Protocol/Internet Protocol) is the suite of protocols that forms the foundation of the internet. Within it, IP handles the addressing and routing of packets, while TCP provides reliable, connection-oriented communication, ensuring that data is delivered in the correct order and without errors. TCP is often used for applications that require high reliability, such as web browsing, email, and file transfers.

- UDP (User Datagram Protocol) is a connectionless protocol that offers faster communication but does not guarantee data delivery. It is commonly used for applications that prioritize speed over reliability, such as streaming media, online gaming, and DNS queries.
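
Applications reach both protocols through the operating system's socket interface. The sketch below sends a message over the loopback interface with each one. It assumes that loopback networking is available, and the function names are hypothetical.

```python
import socket


def udp_round_trip(payload: bytes) -> bytes:
    """UDP: no connection setup; a single datagram is sent and received."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
         socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
        receiver.settimeout(2.0)          # UDP gives no delivery guarantee
        sender.sendto(payload, receiver.getsockname())
        data, _ = receiver.recvfrom(1024)
        return data


def tcp_round_trip(payload: bytes) -> bytes:
    """TCP: a connection is established first, then bytes flow as an ordered stream."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))
        listener.listen(1)
        with socket.create_connection(listener.getsockname()) as client:
            server_side, _ = listener.accept()
            with server_side:
                client.sendall(payload)
                client.shutdown(socket.SHUT_WR)  # signal that no more data will be sent
                chunks = []
                while chunk := server_side.recv(1024):
                    chunks.append(chunk)
                return b"".join(chunks)


print(udp_round_trip(b"ping"))
print(tcp_round_trip(b"hello over tcp"))
```

The TCP version needs a listening socket, a connection, and an explicit end-of-stream signal, while the UDP version simply sends a datagram to an address. That extra machinery is what buys TCP its ordering and reliability.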

Network Address Translation

Network Address Translation (NAT) is a technique used to conserve IP addresses and enhance security. It allows multiple devices on a private network to share a single public IP address. This is particularly useful for home networks and small businesses, where the number of available public IP addresses is limited.

- When a device on a private network attempts to access a resource on the internet, the NAT router translates the private IP address of the device to its own public IP address. This allows the device to communicate with the external network while hiding its private IP address.

- NAT also provides a layer of security by preventing external devices from directly accessing devices on the private network. Only traffic initiated from within the private network can pass through the NAT router.
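
The translation step can be sketched as a pair of lookup tables. This is a simplified model of port-based NAT: the class name, the starting port, and the addresses (taken from ranges reserved for documentation and private use) are assumptions for illustration, and real NAT devices also track protocols and connection timeouts.

```python
class NatTable:
    """Toy NAT: maps (private IP, private port) pairs to ports on one public IP."""

    def __init__(self, public_ip: str, first_port: int = 40000):
        self.public_ip = public_ip
        self.next_port = first_port
        self.outbound = {}  # (private_ip, private_port) -> public_port
        self.inbound = {}   # public_port -> (private_ip, private_port)

    def translate_outbound(self, private_ip: str, private_port: int):
        """Rewrite the source of an outgoing packet, creating a mapping if needed."""
        key = (private_ip, private_port)
        if key not in self.outbound:
            self.outbound[key] = self.next_port
            self.inbound[self.next_port] = key
            self.next_port += 1
        return self.public_ip, self.outbound[key]

    def translate_inbound(self, public_port: int):
        """Find the private destination for a reply; None means drop the packet."""
        return self.inbound.get(public_port)


nat = NatTable("203.0.113.5")
print(nat.translate_outbound("192.168.1.10", 5000))  # ('203.0.113.5', 40000)
print(nat.translate_outbound("192.168.1.11", 5000))  # ('203.0.113.5', 40001)
print(nat.translate_inbound(40001))                  # ('192.168.1.11', 5000)
print(nat.translate_inbound(50000))                  # None: no mapping, unsolicited traffic
```

The last lookup shows why unsolicited inbound traffic does not reach private devices: without a mapping created by earlier outbound traffic, the router has nowhere to forward it.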

Routing

Routing is the process of determining the optimal path for data packets to travel from a source to a destination on a network. Routers are specialized devices that perform routing by examining the destination address of incoming packets and forwarding them along the appropriate path.

- Routers use routing tables, which contain information about network addresses and their corresponding paths, to make routing decisions. These tables can be statically configured or dynamically updated based on network conditions.

- Routing protocols, such as RIP (Routing Information Protocol) and OSPF (Open Shortest Path First), allow routers to exchange routing information and maintain up-to-date routing tables.
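
A routing table lookup can be sketched with Python's `ipaddress` module. The example assumes the common longest-prefix-match rule, where the most specific matching route wins. The routes and interface names are hypothetical.

```python
import ipaddress

# Hypothetical routing table: (destination network, next hop or interface).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "gateway"),  # default route
    (ipaddress.ip_network("10.0.0.0/8"), "eth1"),
    (ipaddress.ip_network("10.1.0.0/16"), "eth2"),
]


def next_hop(destination: str) -> str:
    """Pick the matching route with the longest prefix for a destination address."""
    address = ipaddress.ip_address(destination)
    matches = [(network, hop) for network, hop in ROUTES if address in network]
    # The default route matches every address, so matches is never empty here.
    _, hop = max(matches, key=lambda match: match[0].prefixlen)
    return hop


print(next_hop("10.1.2.3"))  # eth2: /16 is more specific than /8
print(next_hop("10.9.9.9"))  # eth1
print(next_hop("8.8.8.8"))   # gateway: only the default route matches
```

Routing protocols such as RIP and OSPF are what populate and update a table like `ROUTES` in a real router.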

Operating System Evolution

Operating systems have undergone a significant evolution since their inception, adapting to changing hardware capabilities and user demands. Understanding this evolution provides valuable insights into the design principles and future directions of operating systems.

Major Milestones in Operating System History

The history of operating systems is marked by several key milestones that have shaped their development and capabilities.

- Early Batch Systems (1940s-1950s): These systems were designed to run programs in batches, maximizing hardware utilization. Programs were submitted on punched cards or magnetic tape, processed sequentially, and results were returned later. Computers of this era include IBM’s 701 and UNIVAC I.

- Simple Multiprogramming Systems (1960s): Introduced the concept of running multiple programs concurrently by switching between them rapidly. This improved system efficiency by keeping the CPU busy while waiting for I/O operations. Examples include IBM’s OS/360 and Burroughs MCP.

- Time-Sharing Systems (1960s-1970s): Enabled multiple users to interact with the system simultaneously, providing interactive access to resources. Examples include CTSS, Multics, and Unix.

- Personal Computers (1970s-present): The rise of personal computers led to the development of user-friendly operating systems designed for individual use. Early examples include CP/M, Apple DOS, and MS-DOS.

- Graphical User Interfaces (1980s-present): Introduced graphical interfaces, making operating systems more intuitive and accessible to a wider audience. Examples include Apple’s Macintosh operating system and Microsoft Windows.

- Network Operating Systems (1980s-present): Enabled computers to connect and share resources over a network. Examples include Novell NetWare, Microsoft Windows NT, and Unix-based systems like Linux.

- Distributed Operating Systems (1990s-present): Manage resources across multiple computers in a network, providing greater scalability and fault tolerance. Examples include Sun Microsystems’ Solaris and Microsoft Windows Server.

- Mobile Operating Systems (2000s-present): Designed for mobile devices with limited resources and touch-based interfaces. Examples include Apple’s iOS, Google’s Android, and Microsoft’s Windows Phone.

- Cloud Operating Systems (2010s-present): Offer computing resources and services over the internet, providing flexibility and scalability. Strictly speaking, these are cloud platforms rather than single operating systems; examples include Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP).

Comparison of Operating System Generations

Operating systems have evolved through distinct generations, each characterized by specific features and functionalities.

| Generation | Features | Examples |
|---|---|---|
| First Generation (1940s-1950s) | Batch processing, machine language programming, limited memory, no multitasking. | IBM 701, UNIVAC I |
| Second Generation (1960s) | Multiprogramming, assembly language programming, improved memory management, rudimentary I/O handling. | IBM OS/360, Burroughs MCP |
| Third Generation (1970s) | Time-sharing, high-level programming languages, advanced memory management, sophisticated I/O handling, early file systems. | Multics, Unix |
| Fourth Generation (1980s-present) | Graphical user interfaces (GUIs), networking capabilities, distributed systems, improved security and protection mechanisms. | Apple macOS, Microsoft Windows, Unix-based systems |
| Fifth Generation (1990s-present) | Object-oriented programming, distributed computing, parallel processing, mobile operating systems, cloud computing. | Solaris, Windows Server, iOS, Android, AWS, Azure, GCP |

Future Trends in Operating System Development

Operating systems are continuously evolving to meet the demands of emerging technologies and user expectations.

- Artificial Intelligence (AI) Integration: Operating systems will leverage AI for tasks like resource optimization, security threat detection, and personalized user experiences.

- Edge Computing: With the proliferation of IoT devices, operating systems will need to support edge computing, enabling processing and data management closer to the source.

- Quantum Computing: As quantum computing matures, operating systems may need to adapt in order to manage quantum hardware alongside classical resources.

- Augmented and Virtual Reality (AR/VR): Operating systems will need to support AR/VR applications, providing immersive user experiences and new interaction paradigms.

- Increased Security and Privacy: With growing cyber threats, operating systems will prioritize security and privacy, implementing advanced protection mechanisms and data encryption.

Case Studies

Operating systems are the foundation of our digital world, powering everything from personal computers to smartphones and even complex server infrastructure. Understanding the different types of operating systems and their unique characteristics is crucial for anyone working with computers.

Comparison of Popular Operating Systems

To better understand the strengths and weaknesses of different operating systems, let’s examine three popular choices: Windows, macOS, and Linux.

| Operating System | Key Features | Advantages | Disadvantages |
|---|---|---|---|
| Windows | User-friendly interface, wide hardware compatibility, extensive software library, strong gaming support | Widely used, familiar to many users, vast software ecosystem, powerful gaming performance | Higher cost, potential for security vulnerabilities, resource-intensive, can be prone to bloatware |
| macOS | Elegant design, seamless integration with Apple devices, robust security features, intuitive user experience | Excellent security, strong performance, user-friendly interface, seamless integration with Apple ecosystem | Limited hardware compatibility, higher cost, smaller software library compared to Windows |
| Linux | Open-source, highly customizable, versatile, excellent for server environments, strong security focus | Free and open-source, highly customizable, powerful command-line interface, excellent for server applications, generally more secure | Steeper learning curve, less user-friendly interface compared to Windows and macOS, limited software availability for some applications |

Real-World Scenarios

The choice of operating system often depends on the specific needs and preferences of the user or organization.

- Windows: Widely used in personal computers, offices, and gaming.

- macOS: Preferred by creative professionals, graphic designers, and users within the Apple ecosystem.

- Linux: Commonly used in servers, embedded systems, and by developers due to its flexibility and open-source nature.

Conclusion

As technology continues to evolve, operating systems are adapting to meet the demands of an increasingly interconnected world. From the rise of cloud computing and mobile devices to the increasing need for security and performance optimization, the future of operating systems promises exciting innovations that will shape the way we interact with technology for years to come.

An operating system is the foundation of any computer, providing the framework for all other software to run. It manages resources like memory, storage, and peripherals. For tasks involving media like video and audio, a specialized tool called a media encoder can be crucial.

These encoders optimize media files for different platforms and devices, ensuring smooth playback and efficient file sizes. Understanding how the OS interacts with these specialized tools is important for ensuring optimal media performance.